Relation To Other Quantities

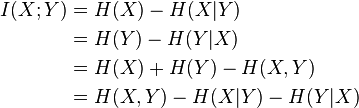

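Mutual information can be equivalently expressed as

$$
\begin{aligned}
I(X;Y) &= H(X) - H(X|Y) \\
&= H(Y) - H(Y|X) \\
&= H(X) + H(Y) - H(X,Y) \\
&= H(X,Y) - H(X|Y) - H(Y|X)
\end{aligned}
$$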

where H(X) and H(Y) are the marginal entropies, H(X|Y) and H(Y|X) are the conditional entropies, and H(X,Y) is the joint entropy of X and Y. Applying Jensen's inequality to the definition of mutual information shows that I(X;Y) is non-negative; consequently, H(X) ≥ H(X|Y).
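The entropy identities above can be checked numerically. The following is a minimal sketch, assuming a small made-up joint distribution (the table `joint` and all helper names are illustrative, not taken from the text). It computes I(X;Y) directly from its definition and compares it with each entropy-based form.

```python
from math import log2

# Hypothetical joint distribution p(x, y), keyed by (x, y); values sum to 1.
joint = {
    (0, 0): 0.25,   (0, 1): 0.125,  (0, 2): 0.125,
    (1, 0): 0.0625, (1, 1): 0.1875, (1, 2): 0.25,
}

def marginal(joint, axis):
    """Marginal of the variable at position `axis` (0 for X, 1 for Y)."""
    out = {}
    for pair, p in joint.items():
        out[pair[axis]] = out.get(pair[axis], 0.0) + p
    return out

def entropy(dist):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * log2(p) for p in dist.values() if p > 0)

def conditional_entropy(joint, given_axis):
    """Entropy of the other variable given the one at `given_axis`, in bits."""
    p_given = marginal(joint, given_axis)
    return -sum(p * log2(p / p_given[pair[given_axis]])
                for pair, p in joint.items() if p > 0)

def mutual_information(joint):
    """I(X;Y) from the definition: sum of p(x,y) log2(p(x,y) / (p(x) p(y)))."""
    px, py = marginal(joint, 0), marginal(joint, 1)
    return sum(p * log2(p / (px[x] * py[y]))
               for (x, y), p in joint.items() if p > 0)

h_x = entropy(marginal(joint, 0))
h_y = entropy(marginal(joint, 1))
h_xy = entropy(joint)
h_x_given_y = conditional_entropy(joint, given_axis=1)  # H(X|Y)
h_y_given_x = conditional_entropy(joint, given_axis=0)  # H(Y|X)
mi = mutual_information(joint)

# The four equivalent expressions for I(X;Y).
forms = [
    h_x - h_x_given_y,
    h_y - h_y_given_x,
    h_x + h_y - h_xy,
    h_xy - h_x_given_y - h_y_given_x,
]
print(mi, forms)
```

The conditional entropies are computed from p(x,y)/p(y) rather than from the chain rule, so the comparison is not circular.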

Intuitively, if the entropy H(X) is regarded as a measure of uncertainty about a random variable, then H(X|Y) is a measure of what Y does not say about X: "the amount of uncertainty remaining about X after Y is known". The right side of the first of these equalities, H(X) − H(X|Y), can therefore be read as "the amount of uncertainty in X, minus the amount of uncertainty in X which remains after Y is known", which is equivalent to "the amount of uncertainty in X which is removed by knowing Y". This corroborates the intuitive meaning of mutual information as the amount of information (that is, reduction in uncertainty) that knowing either variable provides about the other.

Note that in the discrete case H(X|X) = 0 and therefore H(X) = I(X;X). Thus I(X;X) ≥ I(X;Y), and one can formulate the basic principle that a variable contains at least as much information about itself as any other variable can provide.
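As a rough illustration of this principle, the sketch below builds the joint distribution of the pair (X, X), which puts all probability mass on the diagonal, and compares I(X;X) with H(X) and with I(X;Y) for a noisy Y. The distributions `p_x` and `joint_xy` are invented for the example.

```python
from math import log2

def entropy(probs):
    """Shannon entropy in bits of a list of probabilities."""
    return -sum(p * log2(p) for p in probs if p > 0)

def mutual_information(joint):
    """I between row and column variables; joint[i][j] = p(x_i, y_j)."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    return sum(p * log2(p / (px[i] * py[j]))
               for i, row in enumerate(joint)
               for j, p in enumerate(row) if p > 0)

# Hypothetical distribution of X over three outcomes.
p_x = [0.5, 0.25, 0.25]

# Joint distribution of (X, X): mass only where both copies agree.
joint_xx = [[p if i == j else 0.0 for j in range(len(p_x))]
            for i, p in enumerate(p_x)]

# Hypothetical joint of X with a noisy binary Y; row sums equal p_x.
joint_xy = [
    [0.375,  0.125],
    [0.125,  0.125],
    [0.0625, 0.1875],
]

h_x = entropy(p_x)
i_xx = mutual_information(joint_xx)
i_xy = mutual_information(joint_xy)
print(h_x, i_xx, i_xy)
```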

Mutual information can also be expressed as the Kullback-Leibler divergence of the product p(x) p(y) of the marginal distributions of the two random variables X and Y from their joint distribution p(x,y):

$$
I(X;Y) = D_{\mathrm{KL}}\bigl(p(x,y) \,\|\, p(x)\,p(y)\bigr)
$$

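Furthermore, let p(x|y) = p(x, y) / p(y). Then

$$
\begin{aligned}
I(X;Y) &= \sum_y p(y) \sum_x p(x|y) \log \frac{p(x|y)}{p(x)} \\
&= \sum_y p(y)\, D_{\mathrm{KL}}\bigl(p(x|y) \,\|\, p(x)\bigr) \\
&= \mathbb{E}_Y\bigl[ D_{\mathrm{KL}}\bigl(p(x|y) \,\|\, p(x)\bigr) \bigr]
\end{aligned}
$$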

Thus mutual information can also be understood as the expectation, taken over Y, of the Kullback-Leibler divergence of the univariate distribution p(x) of X from the conditional distribution p(x|y) of X given Y: the more the distributions p(x|y) and p(x) differ on average, the greater the information gain.
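The two Kullback-Leibler readings of mutual information can be compared on a small example. This is a sketch under assumed inputs: the table `joint` is made up, `kl_divergence` is an illustrative helper, and logarithms are taken base 2 so that results are in bits.

```python
from math import log2

def kl_divergence(p, q):
    """D_KL(p || q) in bits for two distributions given as aligned lists."""
    return sum(pi * log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical joint distribution, joint[x][y] = p(x, y).
joint = [
    [0.25,   0.125,  0.125],
    [0.0625, 0.1875, 0.25],
]
px = [sum(row) for row in joint]        # marginal of X
py = [sum(col) for col in zip(*joint)]  # marginal of Y

# Form 1: divergence of the product of marginals from the joint distribution.
flat_joint = [p for row in joint for p in row]
flat_product = [px[x] * py[y] for x in range(len(px)) for y in range(len(py))]
mi_joint_vs_product = kl_divergence(flat_joint, flat_product)

# Form 2: expectation over Y of D_KL(p(x|y) || p(x)).
mi_expected = 0.0
for y, p_y in enumerate(py):
    conditional = [joint[x][y] / p_y for x in range(len(px))]  # p(x|y)
    mi_expected += p_y * kl_divergence(conditional, px)

print(mi_joint_vs_product, mi_expected)
```

Both quantities should agree up to floating-point rounding, since the second is only a regrouping of the terms of the first.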
